
This repository has been archived by the owner on Mar 12, 2020. It is now read-only.

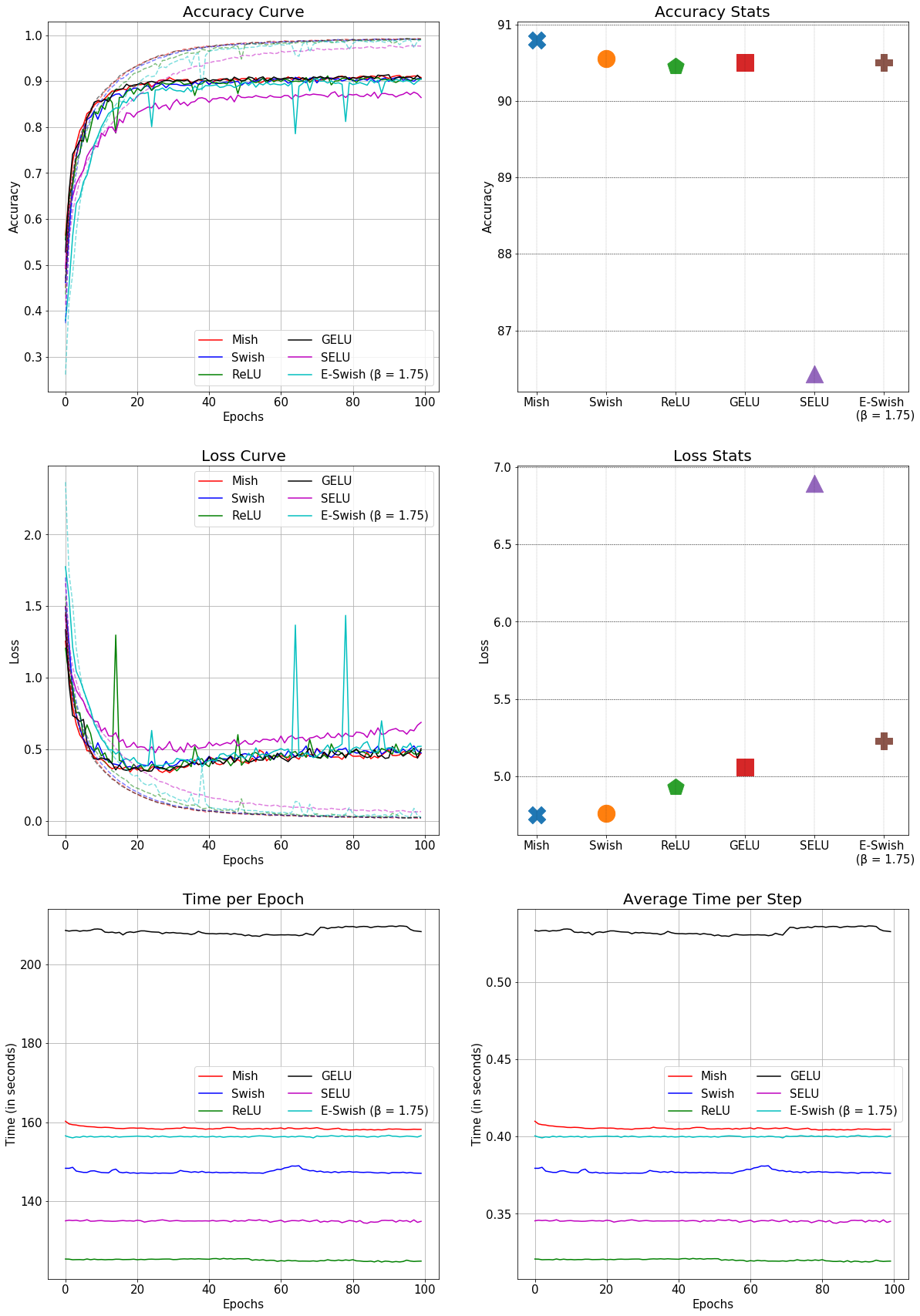


Mish is a novel activation function proposed in this paper.

It has shown promising results so far and has been adopted in several packages including:

All benchmarks, analysis and links to official package implementations can be found in this repository

Mish was also recently used in a submission to the Stanford DAWN CIFAR-10 Training Time Benchmark, where it reached 94% accuracy in just 10.7 seconds, which is the current best score on 4 GPUs and the second fastest overall. Additionally, Mish has been shown to improve the convergence rate, requiring fewer epochs. Reference -

Mish has also shown consistently improved ImageNet scores and is more robust. Reference -

Additional ImageNet benchmarks, along with network architectures and weights, are available on my repository.

Summary of vision-related results:

It would be nice to have Mish as an option within the activation function group.
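For reference, here is a minimal sketch of what such an option would compute, assuming the usual definition of Mish as `x * tanh(softplus(x))`. The helper names `softplus` and `mish` are illustrative only and are not taken from any package's API. The version below is a plain scalar function, not a framework layer.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable softplus, log(1 + exp(x))."""
    # max(x, 0) + log1p(exp(-|x|)) avoids overflow for large |x|.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    # Print a few sample values to show the shape of the function.
    for v in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"mish({v:+.1f}) = {mish(v):+.4f}")
```

A real integration would apply the same formula element-wise to tensors using the framework's own `tanh` and `softplus` primitives.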

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10:
